ARPANET (Advanced Research Projects Agency Network), once used by the US military, grew into what we know today as the Internet. The data it holds has grown from a few gigabytes to 1.2 million terabytes today. In 1995 the internet had 16 million users. Today it has more than 4.6 billion, and the number grows with each passing second. Data created in the last two years alone makes up 90 per cent of all internet data today.

This growth in users and their information has made data storage needs grow exponentially. Whatever you do on the internet, you leave a digital trail. Even a random search by a random user counts towards internet trends and affects how search engines index content. Data centres now occupy the space of football fields. Major companies such as Google and Amazon provide cloud computing and cloud storage services to meet internet users' demand for storage. Because data must also be replicated to survive natural catastrophes, dedicated servers consume even more space.

Surfing the internet may be fun for regular users like us, but for data scientists and businesses looking for specific, relevant information it can be an uphill task. Finding a needle in a haystack is easier than finding the data you want on the internet manually. A single large company creates and stores so much data that it runs its own private data centres. From this, we can imagine how much data is available on the internet as a whole.

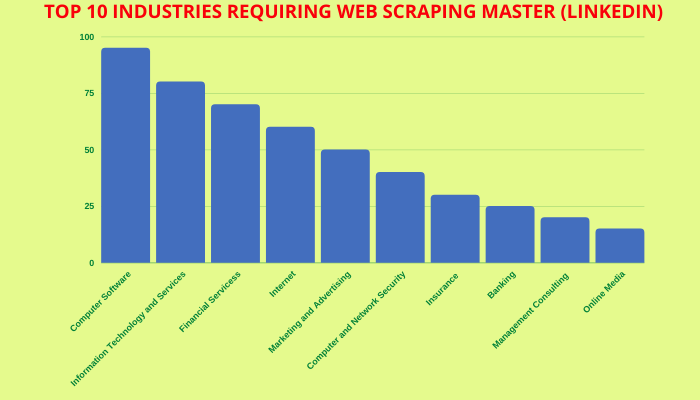

As a result, the role of data science, data mining and data scraping has grown tremendously. Web scraping services are used mainly for data extraction and data analysis, and for diverse purposes such as competitor analysis, research, consumer insights, security and government work.

What is Web Scraping?

The extraction of data from websites is called web scraping or web harvesting: specific data is copied from websites into a local database or spreadsheet. Web scraping services fetch pages over the Hypertext Transfer Protocol (HTTP) or its secure variant, HTTPS, before extracting the data. Scraping can also be done manually, by visiting a particular page and copying its data into a spreadsheet by hand.

Manual scraping is feasible for personal use, when the amount of data involved is limited. When we are dealing with a large amount of data, an automated process is essential. It is implemented using a bot.
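
To make the automated version concrete, here is a minimal sketch in Python. It takes a page's HTML, pulls out product names and prices, and writes them as CSV that a spreadsheet can open. The sample markup, the `name`/`price` class names and the `scrape_to_csv` function are hypothetical. In practice the HTML would come from an HTTP(S) request, for example with `urllib.request.urlopen`, rather than a hard-coded string.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical product-listing markup standing in for a page fetched over HTTP(S).
# The class names "name" and "price" are assumptions for illustration only.
SAMPLE_PAGE = """
<html><body>
  <div class="product"><span class="name">Phone A</span><span class="price">199.00</span></div>
  <div class="product"><span class="name">Phone B</span><span class="price">249.50</span></div>
</body></html>
"""


class ProductParser(HTMLParser):
    """Collects the text of <span class="name"> and <span class="price"> elements."""

    def __init__(self):
        super().__init__()
        self.rows = []       # finished (name, price) pairs
        self._field = None   # field currently being read, if any
        self._current = {}   # fields collected for the current product

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "span" and ("name" in classes or "price" in classes):
            self._field = "name" if "name" in classes else "price"

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None

    def handle_data(self, data):
        if self._field:
            self._current[self._field] = data.strip()
            if len(self._current) == 2:  # both fields seen: the product is complete
                self.rows.append((self._current["name"], self._current["price"]))
                self._current = {}


def scrape_to_csv(html):
    """Extract (name, price) rows from HTML and return them as CSV text."""
    parser = ProductParser()
    parser.feed(html)
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["name", "price"])
    writer.writerows(parser.rows)
    return out.getvalue()


if __name__ == "__main__":
    print(scrape_to_csv(SAMPLE_PAGE))
```

The parser is tied to one page layout. This is why scrapers break when a site changes its HTML.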

Web scraping and web crawling are often mistaken for the same thing, but they are different. Search engines crawl the web, following hyperlinks to discover and index pages, whereas web scraping extracts specific data from pages. Web scraping often uses crawling to fetch the pages it extracts data from.
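
The difference can be sketched in Python. The hypothetical `crawl` function below only discovers pages: it follows links breadth-first and stays on the starting site. Extracting data from each page it returns would be the separate scraping step. To keep the sketch runnable offline, the "site" is an in-memory dictionary of made-up pages, and `fetch` is any function that maps a URL to its HTML. A real crawler would make HTTP(S) requests instead.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

# A hypothetical three-page site held in memory so the sketch runs offline.
SITE = {
    "https://example.com/": '<a href="/phones">Phones</a> <a href="https://other.example/">Elsewhere</a>',
    "https://example.com/phones": '<a href="/phones/a">Phone A</a> <a href="/">Home</a>',
    "https://example.com/phones/a": "<p>Phone A costs 199.00</p>",
}


class LinkParser(HTMLParser):
    """Collects the href value of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start_url, fetch, max_pages=100):
    """Breadth-first crawl restricted to the start URL's host.

    `fetch` maps a URL to its HTML (or None if unavailable).
    Returns the URLs visited, in visit order.
    """
    host = urlparse(start_url).netloc
    queue, seen, visited = deque([start_url]), {start_url}, []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        visited.append(url)
        parser = LinkParser()
        parser.feed(html)
        for href in parser.links:
            link = urljoin(url, href)  # resolve relative links such as "/phones"
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


if __name__ == "__main__":
    print(crawl("https://example.com/", SITE.get))
```

The `seen` set prevents the crawler from revisiting pages that link back to each other, such as the "Home" link above. The `max_pages` cap bounds the work on large sites.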

Websites nowadays are highly advanced, combining GIFs, scripts, Flash animations and more in an integrated ecosystem. Websites are developed with humans in mind, not bots, so data extraction becomes a challenging task. Data extraction relies on the data that websites store in text form. Markup languages such as HTML and XHTML provide the basic framework of any website, and specialised software uses this rich text data for extraction.
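
XHTML is a strict, XML-based form of HTML. Because of this, a well-formed XHTML page can be parsed into a document tree with Python's standard `xml.etree.ElementTree` module and then queried by element and attribute. The sketch below assumes a hypothetical rental-listings table with `id="listings"`. Ordinary HTML is often not well-formed XML, so real pages usually need a more forgiving HTML parser.

```python
import xml.etree.ElementTree as ET

# Namespace prefix used to query XHTML elements in ElementTree paths.
XHTML_NS = {"x": "http://www.w3.org/1999/xhtml"}

# A hypothetical, well-formed XHTML page.
PAGE = """<html xmlns="http://www.w3.org/1999/xhtml">
  <body>
    <table id="listings">
      <tr><td>2 bed flat</td><td>1200</td></tr>
      <tr><td>3 bed house</td><td>1850</td></tr>
    </table>
  </body>
</html>"""


def extract_listings(xhtml):
    """Return (description, rent) pairs from the table with id="listings"."""
    root = ET.fromstring(xhtml)
    table = root.find(".//x:table[@id='listings']", XHTML_NS)
    if table is None:
        return []
    listings = []
    for row in table.iterfind("x:tr", XHTML_NS):
        cells = [td.text.strip() for td in row.iterfind("x:td", XHTML_NS)]
        listings.append((cells[0], int(cells[1])))
    return listings


if __name__ == "__main__":
    print(extract_listings(PAGE))
```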

Simple plug-ins such as Scraper and Data Scraper for Google Chrome can be used for basic web scraping. Specialised software such as ParseHub and OutWit Hub is employed for more advanced web scraping. Major e-commerce companies such as Amazon and social networking companies such as Facebook provide APIs (application programming interfaces) for extracting their public data.

AI is a necessary evil in data scraping: the sheer quantity of data has forced its use. Yet helpful as AI can be, it unnerves people with wild sci-fi fantasies that make the Matrix trilogy pale in comparison.

The legality of web scraping

“Just like the Wild West, the internet has no rules.” But times have changed, in the Wild West and on the internet alike. Laws such as the US Computer Fraud and Abuse Act (CFAA) criminalise breaking into private computer systems and accessing data that is not publicly available. In 2016 hiQ Labs, a data science company, scraped publicly available LinkedIn profiles. LinkedIn called this a violation of its policy against using data without permission and authorization, and hiQ took LinkedIn to court. In a landmark judgement on the legality of web scraping, the court ruled in favour of hiQ, finding that scraping publicly available data likely does not violate the Computer Fraud and Abuse Act.

The morality of web scraping

Businesses use web scraping for online price monitoring, price comparison and collecting product reviews. Real estate companies use it to gather competitors' property listings. Some websites use other websites' public data for their own convenience, without having to do the work themselves. Web scraping therefore lies in a moral grey area: sometimes its use can be justified by internet policy, and sometimes it is a complete violation of basic internet ethics.

If you are looking for cheap phones within a certain price range and use a web scraping tool to extract data from a major e-commerce website, that is quite ethical and can be justified. But if you extract data from a content-based site whose USP (unique selling proposition) is its original content, such as a blog, and use it to create a mirror site, that cannot be justified.

In the age of the internet, where the lines are quite blurred, a basic moral conscience is necessary for making sound judgements.

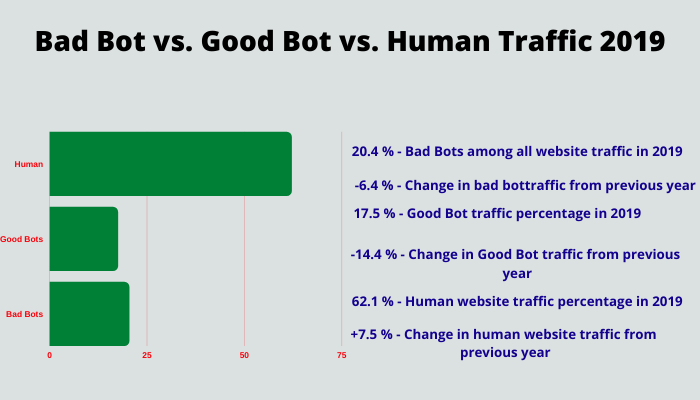

The concept of good bots and bad bots

Support for web scraping is often linked with internet freedom and the fair use of public data, but the picture is not as rosy as it seems. There are many bad bots, i.e. malicious automated software, that can steal data by breaking into user accounts, overload servers with junk data and harm websites.

AI bots get a bad reputation because of the malicious bots crawling the internet. Many websites prohibit web scraping, using advanced bot-detection tools to stop bots from viewing their pages. Scrapers work around this with techniques such as DOM parsing and simulating human behaviour to extract data from these sites.
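
One common way a site states what it prohibits is its robots.txt file, and Python's standard `urllib.robotparser` module can read one. The sketch below parses hypothetical rules from a string so that it runs offline. Against a live site, `set_url()` followed by `read()` would load the real file. It then checks URLs before fetching them. The rules, the URLs and the `ExampleScraper/1.0` user-agent are assumptions for illustration. Checking `can_fetch` and honouring any crawl delay is part of what separates a well-behaved bot from a bad one.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example site.
ROBOTS_TXT = """
User-agent: *
Disallow: /accounts/
Crawl-delay: 5
"""


def make_policy(robots_txt):
    """Parse robots.txt text into a RobotFileParser without any network access."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    policy = make_policy(ROBOTS_TXT)
    agent = "ExampleScraper/1.0"  # hypothetical user-agent string
    for url in ("https://example.com/phones", "https://example.com/accounts/login"):
        print(url, "allowed" if policy.can_fetch(agent, url) else "disallowed")
    print("crawl delay (seconds):", policy.crawl_delay(agent))
```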

Does it require adding a magic touch?

We are living in the age of artificial intelligence, the intelligence demonstrated by machines. Machines are incapable of thinking by themselves. Highly complex software is used to develop machine intelligence that learns, adapts and collects data. AI is now used in many areas, from traffic regulation and pilot training in the aviation industry to critical fields such as nuclear reactors. AI has even made it possible for rovers to roam on Mars.

People are apprehensive about AI and imagine a new world order in which machines rule humans. That makes for a good sci-fi script or story, but the reality is far more mechanical. AI has made it possible to work in environments where humans could not survive. Sensitive areas such as the military and national security rely on AI for information processing, and human lives depend on AI working properly.

The internet is brimming with boundless data. Manual data extraction was tedious even in the past, and with data storage now measured in terabytes, it is nearly impossible. We have to use AI for web harvesting and data mining services. AI can extract, store and process data from thousands of pages in a few seconds, whereas manual scraping covers only a few hundred pages in days. AI has made it possible to scrape websites with gigantic databases and analyse the data to form business strategies and predictions.

Does that mean AI has replaced humans, at least in web scraping? Well, the answer is not binary. AI does a spectacular job at web harvesting, but the human touch is indispensable. Extracted data is like extracted ore: it has to go through processes such as flotation and smelting before it is useful. Data gathered from a site can be repetitive, redundant and in the wrong format, and when we extract this kind of data we overload storage with unnecessary data. Data verification and data scrubbing cleanse the extracted data of inaccurate and corrupt records. These are quite state-of-the-art tools, but the ultimate power lies in human hands.
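
Data verification and scrubbing of this kind can be sketched in a few lines of Python. The records below are hypothetical. The sketch normalises names and prices into one format, drops records that fail a basic verification check, and removes duplicates that differed only in formatting. The specific rules (collapse whitespace and title-case names, take the first number in the price text) are illustrative assumptions. Choosing the right rules for a real dataset is exactly the human judgement described above.

```python
import re

# Hypothetical raw records, as a scraper might collect them: a duplicate,
# inconsistent price formats and two corrupt entries.
RAW_RECORDS = [
    {"name": "Phone A", "price": "$199.00"},
    {"name": "phone a ", "price": "199"},
    {"name": "Phone B", "price": "1,249.50"},
    {"name": "Phone C", "price": "call for price"},
    {"name": "", "price": "99.99"},
]

PRICE_RE = re.compile(r"\d+(?:\.\d+)?")


def parse_price(text):
    """Return the first number in the text as a float, or None if there is none."""
    match = PRICE_RE.search(text.replace(",", ""))  # drop thousands separators first
    return float(match.group()) if match else None


def scrub(records):
    """Normalise, verify and de-duplicate scraped records."""
    clean, seen = [], set()
    for record in records:
        name = " ".join(record.get("name", "").split()).title()  # tidy whitespace and case
        price = parse_price(record.get("price", ""))
        if not name or price is None:  # verification: drop incomplete or corrupt rows
            continue
        key = (name, price)
        if key in seen:  # de-duplication, after normalisation
            continue
        seen.add(key)
        clean.append({"name": name, "price": price})
    return clean


if __name__ == "__main__":
    for row in scrub(RAW_RECORDS):
        print(row)
```

Normalising before de-duplicating matters here. "Phone A" at "$199.00" and "phone a " at "199" only match once both are in the same format.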

Machine intelligence is called artificial for a reason. An AI extracting data cannot judge whether that data is actually needed for a purpose the way a human can. Suppose a company wants to launch a new clothing line for teenage girls, so it extracts data on what teenage girls find fashionable. Websites that want to stay near the top of search engine results often misuse their metadata, for example by tagging pages with popular keywords unrelated to their content. The AI, being AI, will extract such pages as data on teenage fashion, even though the data actually implies something else.
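
A rough, hypothetical heuristic shows how this kind of metadata misuse might be flagged for a human to review. It compares a page's `<meta name="keywords">` list with the page's visible text and reports keywords the text never mentions. This is an illustrative sketch only, not a technique the article or any particular tool is known to use. Plain substring matching is crude, and a person still has to decide what a flagged page actually means.

```python
from html.parser import HTMLParser


class PageText(HTMLParser):
    """Collects <meta name="keywords"> content and the page's visible text."""

    def __init__(self):
        super().__init__()
        self.keywords = []
        self.text = []
        self._skip = 0  # depth inside <script>/<style>, whose text is not visible

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "keywords":
            content = attrs.get("content") or ""
            self.keywords = [k.strip().lower() for k in content.split(",") if k.strip()]
        elif tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.text.append(data.lower())


def unsupported_keywords(html):
    """Return meta keywords that never appear in the page's visible text."""
    page = PageText()
    page.feed(html)
    body = " ".join(page.text)
    return [k for k in page.keywords if k not in body]


# Hypothetical page whose keywords do not match its content.
PAGE = """<html><head>
<meta name="keywords" content="teen fashion, summer dresses, car insurance">
<title>Cheap car insurance</title></head>
<body><p>Compare car insurance quotes in minutes.</p></body></html>"""

if __name__ == "__main__":
    print(unsupported_keywords(PAGE))
```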

Many websites prevent web crawling by using CAPTCHAs, embedding information in media objects, requiring logins and changing their HTML regularly. At present, AI cannot reliably get past these anti-scraping mechanisms.

In situations like these, the human touch becomes essential. As they say, “Artificial intelligence has the same relation to intelligence as an artificial rose has to a real rose.”
